How to Efficiently Manage Azure Data Factory Costs?

Managing cloud service expenses, such as those for Azure Data Factory (ADF), is essential to maximizing return on investment and operating efficiency. Azure Data Factory is a robust platform for creating and managing data pipelines; with it, organizations can integrate, transform, and load data from multiple sources into data lakes or data warehouses. Nevertheless, using ADF may result in unforeseen costs if appropriate cost-control techniques aren't applied. In this article, we'll explore recommended practices for managing expenses and making the most of ADF's capabilities.

Table of Contents

- Understanding Azure Data Factory Costs and Pricing

- Best Practices for Optimizing Azure Data Factory Costs

- Conclusion

- People Also Ask

Understanding Azure Data Factory Costs and Pricing

Azure Data Factory operates based on a pay-as-you-go pricing model, which means users are charged for the resources they consume. The key cost factors to consider include:

Data Integration Units (DIUs)

DIUs are the unit ADF uses to measure resource consumption. A single DIU represents a combination of CPU, memory, and network resources that ADF pipelines use for data transfer and transformation operations. Azure Data Factory allocates DIUs to carry out data integration operations such as moving data between databases, converting data formats, and performing intricate data manipulations.

The complexity and size of your data operations determine how many DIUs are needed. Resource-intensive tasks, such as processing large-volume data streams or performing large-scale data transformations, may require higher allocations. Because DIUs directly drive resource consumption, cost management depends on allocating them as efficiently as possible for each workload. Sizing DIUs appropriately ensures effective resource use while reducing needless costs.
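
To make the sizing trade-off concrete, here is a minimal sketch that assumes copy cost scales with DIUs multiplied by run time and a per-DIU-hour rate. The helper name `estimate_copy_cost` and the rate value are illustrative assumptions, not official figures; actual rates depend on region and integration runtime type, so check the current Azure pricing page.

```python
def estimate_copy_cost(dius: int, duration_minutes: float, rate_per_diu_hour: float) -> float:
    """Rough estimate: DIUs x hours x rate (hypothetical helper, not an ADF API)."""
    if dius <= 0 or duration_minutes < 0 or rate_per_diu_hour < 0:
        raise ValueError("dius must be positive; duration and rate must be non-negative")
    hours = duration_minutes / 60
    return dius * hours * rate_per_diu_hour


# Placeholder rate for illustration only; look up the real rate for your region.
ASSUMED_RATE_PER_DIU_HOUR = 0.25

# Same 30-minute run at two different DIU allocations.
right_sized = estimate_copy_cost(4, 30, ASSUMED_RATE_PER_DIU_HOUR)
over_provisioned = estimate_copy_cost(16, 30, ASSUMED_RATE_PER_DIU_HOUR)

print(f"4 DIUs:  {right_sized:.2f}")
print(f"16 DIUs: {over_provisioned:.2f}")
```

In practice a higher DIU count may also shorten the run, so the comparison is only meaningful when you plug in measured durations for each setting.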

Data Movement Activities

Data movement activities are another significant cost component in Azure Data Factory. The amount of data moved between data stores (such as Azure Blob Storage, Azure SQL Database, and AWS S3) during pipeline runs determines how much it will cost. Azure Data Factory calculates data movement charges from the volume of data processed or copied, commonly expressed in gigabytes (GB) or terabytes (TB).

To reduce the expense of data movement:

- Reduce the amount of data before transferring it by using data compression techniques.

- Where cheaper pricing tiers or less contended capacity are available, plan data movement for off-peak times.

- Reduce pointless data transfers by optimizing the design of the data flow.

Managing data movement activities effectively can deliver significant cost savings while maintaining performance and reliability.
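
As an illustration of the compression tip above, the following sketch uses Python's standard `gzip` module to shrink a payload before it is staged for transfer. The sample data and helper names are made up for the example, and real savings depend heavily on how repetitive the data is.

```python
import gzip


def compress_payload(raw: bytes) -> bytes:
    """Compress a payload with gzip before staging it for transfer."""
    return gzip.compress(raw)


def compression_ratio(raw: bytes, compressed: bytes) -> float:
    """Return compressed size as a fraction of the original size."""
    if not raw:
        raise ValueError("raw payload must not be empty")
    return len(compressed) / len(raw)


# Hypothetical CSV extract with highly repetitive rows.
sample = b"order_id,customer,amount\n" + b"1001,contoso,19.99\n" * 5000

packed = compress_payload(sample)
ratio = compression_ratio(sample, packed)

print(f"original bytes:   {len(sample)}")
print(f"compressed bytes: {len(packed)}")
print(f"ratio:            {ratio:.3f}")

# The round trip must return the original bytes unchanged.
assert gzip.decompress(packed) == sample
```

Compression trades CPU time for transfer volume, so it pays off most on text-like formats such as CSV or JSON and much less on data that is already compressed.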

Debugging Data Flow

The cost of debugging data flows in Azure Data Factory is a function of execution time. To effectively detect and fix errors, developers can use debugging mode when troubleshooting pipelines or checking data transformations. However, because longer debugging sessions need more resources, they may result in higher expenditures.

To cut down on debugging expenses:

- Keep debugging sessions restricted to particular activities or crucial phases.

- Make use of the logging and monitoring options to quickly find and diagnose problems.

- Streamline troubleshooting procedures by implementing effective debugging techniques.

Optimizing Azure Data Factory utilization requires striking a balance between the necessity for extensive testing and financial concerns.

External Service Calls

To extend its data processing capabilities, Azure Data Factory can interact with external services and interfaces. However, calling external services from within pipelines adds cost. Examples include using third-party connectors for specialized data processes, calling APIs, and executing Azure Functions.

To control the cost of external service calls:

- Determine whether an external service is actually required for each task.

- Minimize pointless API calls and data transfers by optimizing service utilization.

- When possible, take advantage of inherent ADF functionalities to lessen your dependency on outside services.

Understanding and optimizing the costs of external service integrations contributes to Azure Data Factory's overall cost-effectiveness and resource optimization.
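
One way to cut pointless API calls is to cache results for repeated inputs. The sketch below is a minimal illustration using Python's `functools.lru_cache`; `fetch_exchange_rate` is a hypothetical stand-in for a billable external call, not a real service or ADF feature.

```python
from functools import lru_cache

call_count = 0  # counts how often the simulated external service is hit


def fetch_exchange_rate(currency: str) -> float:
    """Hypothetical stand-in for a billable external API call."""
    global call_count
    call_count += 1
    return {"EUR": 1.1, "GBP": 1.3}.get(currency, 1.0)


@lru_cache(maxsize=128)
def cached_rate(currency: str) -> float:
    """Only reach the external service the first time a currency is seen."""
    return fetch_exchange_rate(currency)


# Five rows, but only two distinct currencies.
rows = ["EUR", "GBP", "EUR", "EUR", "GBP"]
rates = [cached_rate(currency) for currency in rows]

print(f"rows processed: {len(rows)}")
print(f"external calls: {call_count}")
```

Caching like this only suits lookups whose results stay valid for the duration of the run; values that change frequently need an expiry policy or a fresh call.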

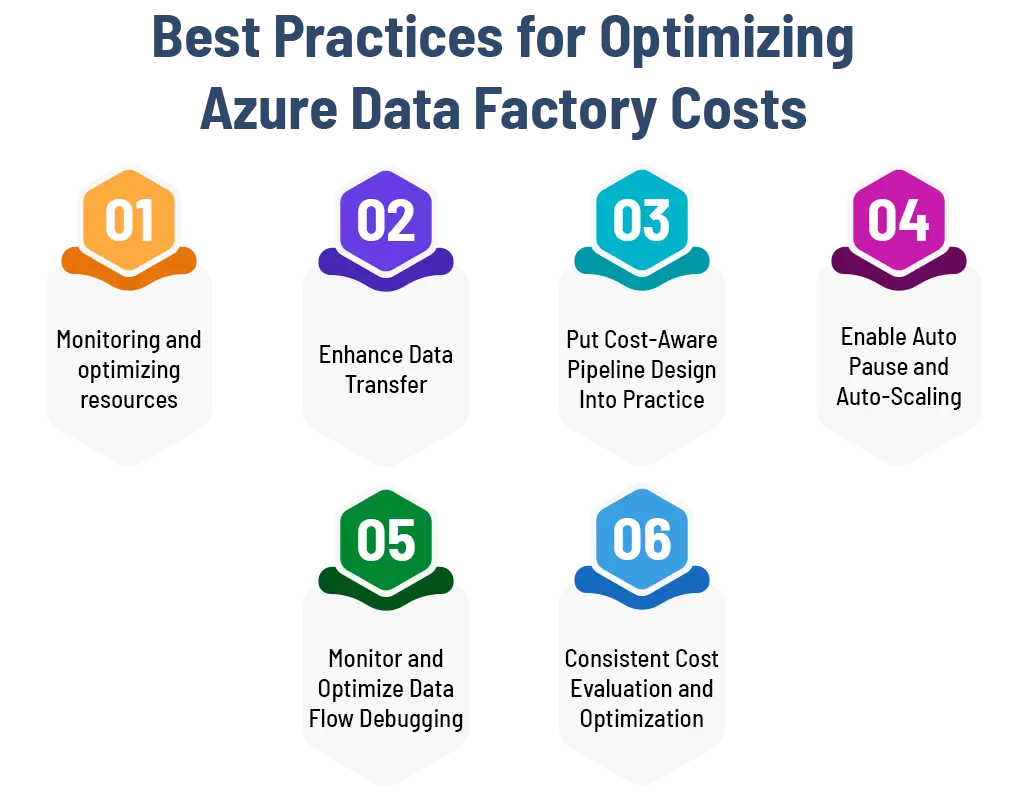

Best Practices for Optimizing Azure Data Factory Costs

Monitor and Optimize Resources

- Make Use of Azure Monitor: Use it to track pipeline executions and resource usage and to spot performance bottlenecks. This visibility helps you identify cost-intensive activities and optimize resource allocation.

- Right-Size Compute Resources: Scale compute resources (DIUs) to match actual workload requirements. Steer clear of overprovisioning to cut down on wasteful expenses.

Enhance Data Transfer

- Use Efficient Data Compression: Lower data movement expenses by using data compression techniques to minimize the amount of data transported between sources and destinations.

- Plan Off-Peak Data Transfers: Where cheaper pricing tiers or less contended capacity are available, schedule data integration tasks for off-peak hours.

Put Cost-Aware Pipeline Design into Practice

- Utilize Effective Activities: Select data processing operations and transformations that are economical. When possible, choose native ADF activities to reduce the need for outside services.

- Divide Big Workloads: To disperse processing loads and maximize resource use, divide huge datasets using partitioning techniques.
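
The partitioning idea can be sketched in a few lines. The example below splits a dataset into fixed-size batches that could then be processed independently; the `partition` helper and the batch size are illustrative assumptions, and a real pipeline would more likely partition on a column such as a date or key range.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def partition(items: Sequence[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive batches of at most `size` items."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


# Hypothetical dataset of ten record IDs, split into batches of four.
records = list(range(10))
batches = list(partition(records, 4))

for index, batch in enumerate(batches):
    print(f"batch {index}: {batch}")
```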

Enable Auto-Pause and Auto-Scaling

- Auto-Pause Idle Resources: Turn on auto-pause settings to stop Azure Data Factory resources while they're idle. This will cut expenses by preventing needless resource utilization.

- Workload Auto-Scaling: Set up auto-scaling according to workload demand to optimize expenses and dynamically modify resource allocation.

Monitor and Optimize Data Flow Debugging

- Limit Debugging Duration: To cut expenses, minimize the amount of time spent troubleshooting data flows. Make use of logging and monitoring tools and efficient debugging techniques.

Consistent Cost Evaluation and Optimization

- Examine Cost Data: To find areas for cost optimization and reduction, review cost data and consumption trends on a regular basis.

- Establish Budget Controls: To proactively control Azure Data Factory expenditures and avoid unforeseen expenses, set budget alerts and thresholds.
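
The logic behind budget thresholds can be illustrated with a short sketch. Azure's built-in budget alerts would normally handle this for you; the function name, threshold values, and cost figures below are assumptions made up for the example.

```python
from typing import List, Sequence


def budget_alerts(
    spend_to_date: float,
    monthly_budget: float,
    thresholds: Sequence[float] = (0.5, 0.8, 1.0),
) -> List[float]:
    """Return the thresholds (as fractions of budget) that spend has reached."""
    if monthly_budget <= 0:
        raise ValueError("monthly_budget must be positive")
    used = spend_to_date / monthly_budget
    return [t for t in sorted(thresholds) if used >= t]


# Hypothetical daily ADF costs exported from a cost report.
daily_costs = [12.0, 15.5, 9.5, 43.0]
total = sum(daily_costs)

alerts = budget_alerts(total, monthly_budget=100.0)

print(f"spend to date: {total:.2f}")
for threshold in alerts:
    print(f"alert: reached {threshold:.0%} of budget")
```

Checking several thresholds rather than a single limit gives early warning while there is still time to adjust schedules or resource sizes.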

Partnering with a Custom Software Development Company for Cost-Effective Solutions!

Implementing cost control techniques for Azure Data Factory successfully requires proficiency in cloud architecture and data engineering. Getting in touch with a reputable custom software development company that specializes in IT outsourcing and Azure services can provide insightful advice and assistance for optimizing Azure Data Factory expenses. These businesses use best practices to increase efficiency and cost-effectiveness while providing customized solutions that meet company objectives.

Conclusion

Businesses using cloud-based data integration and analytics must effectively manage Azure Data Factory costs. Businesses can maximize the value generated from Azure Data Factory, optimize resource use, and cut down on needless expenses by putting the best practices described in this article into practice. Partnering with seasoned IT outsourcing companies can boost cost-effectiveness even more and guarantee the successful deployment of Azure Data Factory solutions customized to business needs.

People Also Ask

- What elements affect the price of Azure Data Factory?

Data Integration Units (DIUs) used for data movement and transformation, the amount of data transferred during data movement activities, costs incurred during data flow debugging, and costs related to using external services or connectors are some of the factors that affect the costs of Azure Data Factory.

- How may Azure Data Factory costs be optimized?

Reduce the cost of Azure Data Factory by keeping an eye on resource usage, properly sizing compute resources (DIUs), applying data compression strategies, planning off-peak data transfers, turning on auto-pause and auto-scaling, restricting data flow debugging, and using external services efficiently.

- How can I efficiently track and manage the charges of Azure Data Factory?

You can track and manage Azure Data Factory expenses by analyzing cost reports and usage trends on a regular basis, establishing budget alerts and thresholds, and putting resource optimization techniques and cost-aware pipeline design into practice. Working with seasoned IT outsourcing firms can also help you find economical solutions.

- Within Azure Data Factory, what are the best strategies for moving data at a reasonable cost?

Implementing partitioning algorithms for huge datasets, scheduling data movement during off-peak hours, and improving data flow design to prevent needless transfers are some of the best practices for cost-effective data movement.

- How may Azure Data Factory cost management be improved by working with a custom software development company?

By working with a custom software development company that specializes in Azure services, you can gain access to expertise in data engineering and cloud architecture. Such a company can provide customized solutions aligned with your specific cost-optimization objectives, apply efficient best practices, and offer proactive monitoring and support for Azure Data Factory deployments.